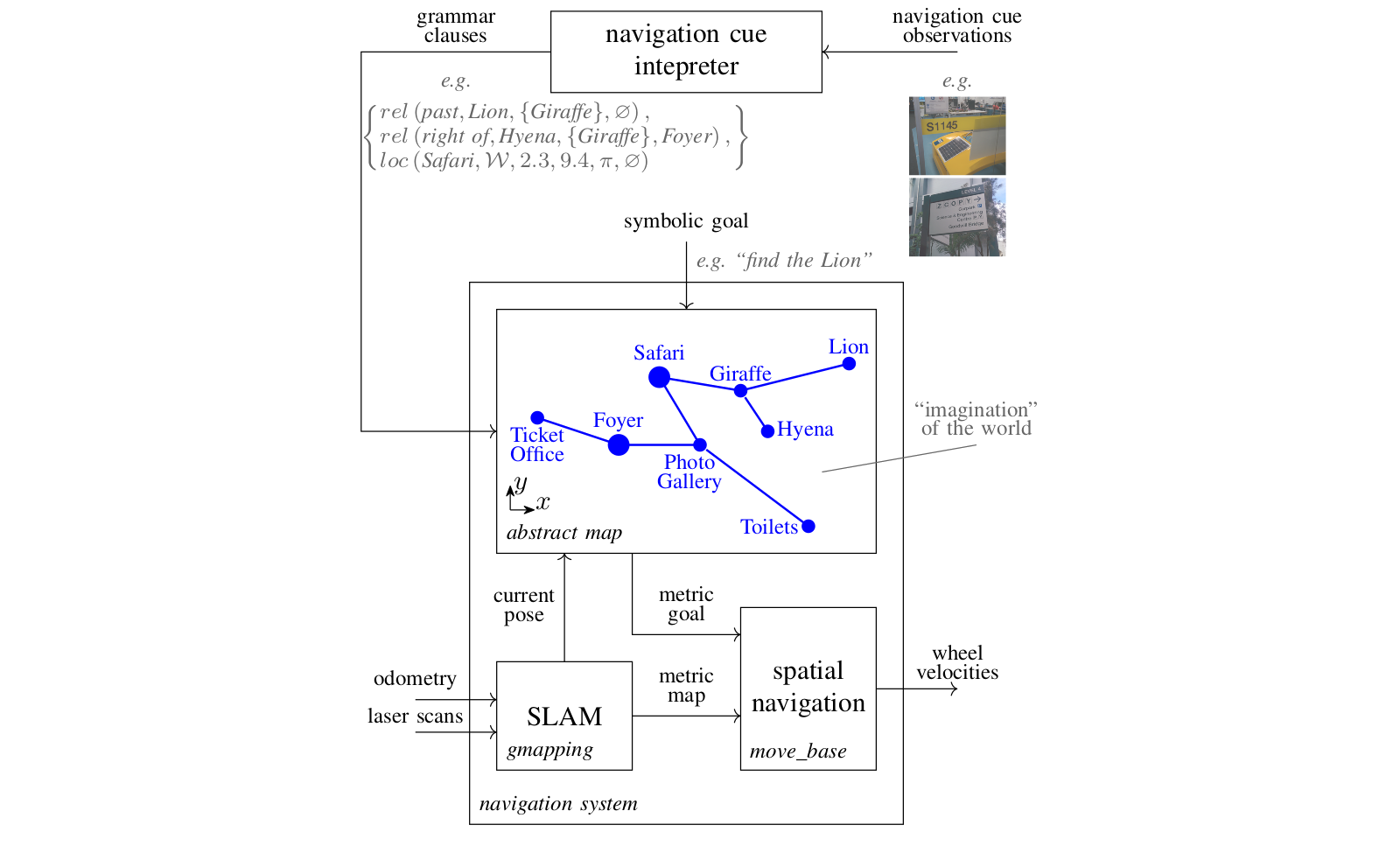

By using the abstract map, a robot navigation system can use symbols to navigate purposefully in unseen spaces. Here we show videos of the abstract map in action and link to the open source code released with the publication of our results. The code includes a Python implementation of the abstract map, a 2D simulator for reproducing the zoo experiments, and a mobile phone app for playing symbolic spatial navigation games.

Quick links

- Publication in IEEE Transactions on Cognitive and Developmental Systems: https://doi.org/10.1109/TCDS.2020.2993855

- Pre-print on arXiv: https://arxiv.org/abs/2001.11684

- Python implementation of the abstract map: https://github.com/btalb/abstract_map

- 2D simulator for recreating the abstract map zoo experiments: https://github.com/btalb/abstract_map_simulator

- Mobile phone application used by human participants in the zoo experiments: https://github.com/btalb/abstract_map_app

Open source abstract map resources

The first open source contribution is a full Python implementation of the abstract map (https://github.com/btalb/abstract_map). The implementation features:

- a novel dynamics-based malleable spatial model for imagining unseen spaces from symbols (which includes simulated springs, friction, repulsive forces, & collision models)

- a visualiser & text-based commentator for introspection of your navigation system (both shown in videos on the repository website)

- easy ROS bindings for getting up & running in simulation or on a real robot

- tag readers & interpreters for extracting symbolic spatial information from AprilTags

- configuration files for the zoo experiments performed on GP-S11 of QUT’s Gardens Point campus (see the paper for further details)

- serialisation methods for passing an entire abstract map state between machines, or saving to file
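The malleable spatial model above is described in the paper and implemented in the repository; the code below is not that implementation. It is a minimal, hypothetical sketch of the underlying idea, assuming each place is a unit point mass, each symbolic distance hint (e.g. "the lion is about 5 m from the entrance") is a spring, friction is simple viscous damping, and every pair of places repels with an inverse-square force. Collision models are omitted. The names `Node`, `Spring`, `step` and `settle` are illustrative only and are not part of the abstract_map package.

```python
import math
from dataclasses import dataclass


@dataclass
class Node:
    """A place in the imagined layout, treated as a unit point mass (hypothetical)."""
    x: float
    y: float
    vx: float = 0.0
    vy: float = 0.0


@dataclass
class Spring:
    """A symbolic distance hint between two places (hypothetical)."""
    a: str
    b: str
    rest_length: float
    stiffness: float = 1.0


def step(nodes, springs, dt=0.05, friction=0.5, repulsion=0.0):
    """Advance the spring-mass system by one semi-implicit Euler step."""
    forces = {name: [0.0, 0.0] for name in nodes}

    # Hooke's law: each spring pulls or pushes its pair toward the rest length.
    for s in springs:
        na, nb = nodes[s.a], nodes[s.b]
        dx, dy = nb.x - na.x, nb.y - na.y
        dist = max(math.hypot(dx, dy), 1e-9)
        f = s.stiffness * (dist - s.rest_length)
        forces[s.a][0] += f * dx / dist
        forces[s.a][1] += f * dy / dist
        forces[s.b][0] -= f * dx / dist
        forces[s.b][1] -= f * dy / dist

    # Inverse-square repulsion between every pair keeps places from piling up.
    names = list(nodes)
    for i, p in enumerate(names):
        for q in names[i + 1:]:
            dx, dy = nodes[q].x - nodes[p].x, nodes[q].y - nodes[p].y
            dist = max(math.hypot(dx, dy), 1e-9)
            f = repulsion / dist ** 2
            forces[p][0] -= f * dx / dist
            forces[p][1] -= f * dy / dist
            forces[q][0] += f * dx / dist
            forces[q][1] += f * dy / dist

    # Viscous friction damps motion so the layout settles (unit mass assumed).
    for name, n in nodes.items():
        n.vx += (forces[name][0] - friction * n.vx) * dt
        n.vy += (forces[name][1] - friction * n.vy) * dt
        n.x += n.vx * dt
        n.y += n.vy * dt


def settle(nodes, springs, steps=2000, **kwargs):
    """Run the simulation for a fixed number of steps and return the nodes."""
    for _ in range(steps):
        step(nodes, springs, **kwargs)
    return nodes


if __name__ == "__main__":
    places = {"entrance": Node(0.0, 0.0), "lion": Node(1.0, 0.0)}
    settle(places, [Spring("entrance", "lion", rest_length=5.0)])
    gap = math.hypot(places["lion"].x - places["entrance"].x,
                     places["lion"].y - places["entrance"].y)
    print(f"imagined entrance-to-lion distance: {gap:.2f} m")
```

With friction, the two places oscillate and then settle near the 5 m rest length. As new symbols are observed, further springs would reshape the imagined layout, which is the sense in which such a model is malleable.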

We also provide a configured 2D simulator for reproducing the zoo experiments in a simulated version of our environment. The simulator package includes:

- world & launch files for a Stage simulation of the GP-S11 environment on QUT’s Gardens Point campus

- a tool for creating simulated tags in an environment & saving them to file

- launch & config files for using the move_base navigation stack with gmapping to explore unseen simulated environments

Lastly, we provide code for the mobile application used by human participants in the zoo experiments. The phone application, created with Android Studio, includes the following:

- opening screen for users to select experiment name & goal location

- live display of the camera to help users correctly capture a tag

- instant visual feedback when a tag is detected, with colouring to denote whether the tag's symbolic spatial information is not the goal (red), is navigation information (orange), or is the goal (green)

- experiment definitions & tag mappings can be created using the same XML format as the abstract_map package

- integration with the native C AprilTags library via the Android NDK
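The red/orange/green feedback rule can be illustrated with a small decision function. This is a hypothetical sketch in Python, not code from the Android app: it assumes a decoded tag either names a place (`label`) or carries navigation hints (`directions`), and that goal matching is a case-insensitive label comparison. `TagInfo`, `Feedback` and `classify` are illustrative names only.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class Feedback(Enum):
    """Colour shown to the participant after a tag is detected."""
    RED = "not the goal"
    ORANGE = "navigation information"
    GREEN = "goal"


@dataclass
class TagInfo:
    """Symbolic spatial information decoded from one tag (hypothetical structure)."""
    label: Optional[str] = None  # place the tag names, if it is a place label
    directions: List[str] = field(default_factory=list)  # navigation hints it carries


def classify(tag: TagInfo, goal: str) -> Feedback:
    """Map a detected tag to the feedback colour for the current goal."""
    # Goal reached: the tag labels the place the participant is looking for.
    if tag.label is not None and tag.label.strip().lower() == goal.strip().lower():
        return Feedback.GREEN
    # The tag carries directions that may help reach the goal.
    if tag.directions:
        return Feedback.ORANGE
    # Otherwise the tag labels some other place.
    return Feedback.RED


if __name__ == "__main__":
    print(classify(TagInfo(label="Lion"), goal="lion").name)
```

Checking the goal first means a tag that both names the goal and carries directions is still shown as green.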

We hope these tools help people engage with our research and, more importantly, aid future research into developing robots that use symbols in their navigation processes.

Videos of the abstract map in action

Below are videos for five different symbolic navigation tasks completed as part of the zoo experiments described in our paper. In the experiments the robot was placed in an environment with no existing map, and given a symbolic goal like “find the lion”. Could the abstract map, using only the symbolic spatial information available from the AprilTags in the environment, successfully find the goal?

Human participants who had never visited the environment before were given the same tasks. The results show that the abstract map enables symbolic navigation performance comparable to that of humans in unseen built environments.

Find the lion

Find the kingfisher

Find the polar bear

Find the anaconda

Find the toilets

Acknowledgements & citing our work

This work was supported by the Australian Research Council’s Discovery Projects Funding Scheme under Project DP140103216. The authors are with the QUT Centre for Robotics.

If you use the abstract map in your research, or for comparisons, please kindly cite our work:

@ARTICLE{9091567,
  author={B. {Talbot} and F. {Dayoub} and P. {Corke} and G. {Wyeth}},
  journal={IEEE Transactions on Cognitive and Developmental Systems},
  title={Robot Navigation in Unseen Spaces using an Abstract Map},
  year={2020},
  volume={},
  number={},
  pages={1-1},
  keywords={Navigation;Robot sensing systems;Measurement;Linguistics;Visualization;symbol grounding;symbolic spatial information;abstract map;navigation;cognitive robotics;intelligent robots.},
  doi={10.1109/TCDS.2020.2993855},
  ISSN={2379-8939},
  month={},
}

Further information

Please see our paper for full details of the abstract map’s role in robot navigation systems & the zoo experiments shown above.

For more information about the abstract map, see our related publications or contact the authors via email: b.talbot@qut.edu.au